⚙️ AI cameras were quietly used to detect the emotions of UK train passengers

Good morning. Today’s main story is a little longer than usual — it pertains to the release of a lengthy selection of documents concerning the way AI is being applied to CCTV cameras in U.K. train stations. Read on for the full story.

On a semi-related note, The Deep View is hiring. If you’re interested in joining our team, send an application here.

In today’s newsletter:

📞 AI for Good: How AI is helping people with ALS communicate

⛑️ The challenges to a simple plan for safe AI deployment

📱 Apple’s ChatGPT integration won’t come until 2025

📸 AI cameras were quietly used to detect the emotions of UK train passengers

AI for Good: How AI is helping people with ALS communicate

Photo by John Schnobrich (Unsplash).

Impaired speech, also known as dysarthria, is common in people living with ALS. The resulting communication challenges have begun to give way to a technological solution, one achieved through artificial intelligence.

The details: In 2019, Google’s Project Euphonia partnered with the ALS Therapy Development Institute (ALS TDI) to improve the ability of computers to understand impaired speech.

To help build these tools, ALS TDI recruited people with ALS who were willing to record samples of their speech.

Google then trained its voice recognition models on these voice samples, to enable its systems to recognize impaired speech.

You can watch Project Euphonia in action here.

Voice Preservation: A component of the project — and something that has been advancing since 2016 — involves voice preservation, where an AI model can create a synthesis of your voice before you lose it.

Why it matters: Loss of communication, as one study says, can increase social isolation, reducing quality of life. It also makes it much harder for people to ask for help.

The challenges to a simple plan for safe AI deployment

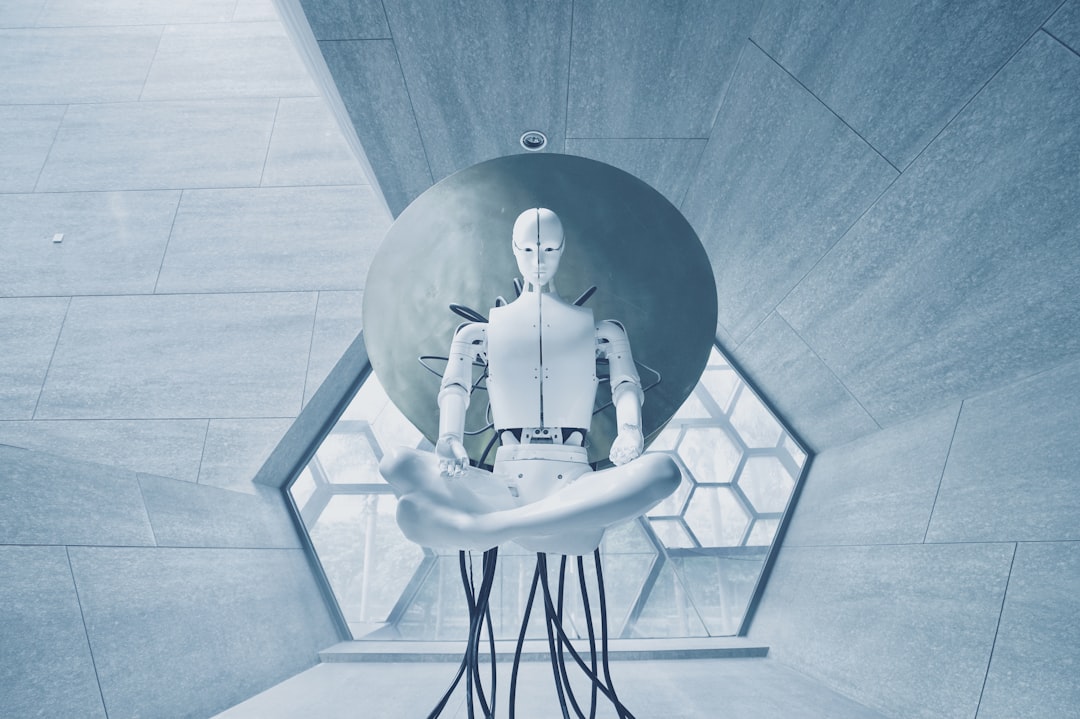

Photo by Aideal Hwa (Unsplash).

As powerful as today’s AI applications might seem, limitations (& hallucinations) are baked into their architecture. Self-driving cars, for example, struggle to detect obstructions, objects and pedestrians, and in general, cannot be adequately trained to respond to edge cases.

Large language models (LLMs), meanwhile, routinely provide incorrect information. Whether this be through the false summarization of an article or faulty responses to specific queries, models’ propensity to make stuff up makes them hard to trust.

Here’s one solution: In a new paper, Tim Rudner and Helen Toner argue that a key way to overcome the problem involves building models that “know what they don’t know.”

This, they said, could be accomplished through some form of confidence score, so that “the system could be useful in situations where it performs well, and harmless in situations where it does not.”

The problem is that it’s “impossible to know in advance what kinds of unexpected situations — what unknown unknowns — a system deployed in the messy real world may encounter.”

Why it matters: This has been an active area of research for several years now. And there are certain approaches — Deterministic Methods, Model Ensembling, Conformal Prediction and Bayesian Inference — which, though they have many drawbacks, act as a partial solution to the problem. The authors argue that continuing research into these areas will “play a crucial role” in ensuring the safe, explainable and reliable deployment of generative AI.
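To make the confidence-score idea concrete, here is a minimal sketch of one of the approaches named above, model ensembling, combined with a simple rule for abstaining. It is not the method from the paper. The function name, the labels and the 0.8 threshold are all hypothetical, chosen only for illustration.

```python
from statistics import mean


def ensemble_predict(member_probs, threshold=0.8):
    """Average the class probabilities of several models and abstain if unsure.

    member_probs: one dict per ensemble member, mapping label -> probability.
    Returns (label, confidence), or (None, confidence) when the averaged
    confidence falls below the (hypothetical) threshold.
    """
    labels = list(member_probs[0].keys())
    # Average each label's probability across all ensemble members.
    averaged = {label: mean(p[label] for p in member_probs) for label in labels}
    best = max(averaged, key=averaged.get)
    confidence = averaged[best]
    if confidence < threshold:
        # The members disagree or are unsure: defer to a human or a fallback.
        return None, confidence
    return best, confidence


# Hypothetical outputs from three models looking at the same camera frame.
members_agree = [
    {"pedestrian": 0.95, "clear": 0.05},
    {"pedestrian": 0.90, "clear": 0.10},
    {"pedestrian": 0.97, "clear": 0.03},
]
members_disagree = [
    {"pedestrian": 0.9, "clear": 0.1},
    {"pedestrian": 0.2, "clear": 0.8},
    {"pedestrian": 0.5, "clear": 0.5},
]

print(ensemble_predict(members_agree))     # confident: returns a label
print(ensemble_predict(members_disagree))  # unsure: returns None as the label
```

The catch is the one the authors raise: an ensemble can still be confidently wrong on inputs unlike anything in its training data, so a threshold like this reduces the problem rather than solving it.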

Together with SaneBox

Stop Wasting Time Sorting Email

Why bother spending hours organizing your inbox every week when AI can do it for you?

SaneBox — called "the best thing that's happened to email since its invention” by PCMag — is an AI-powered email tool that brings sanity back to your inbox.

SaneBox ensures only important emails land in your inbox, files other emails into folders and even has features that let you hit Snooze and remind you to follow up on emails you sent a few days ago.

Apple’s ChatGPT integration won’t come until 2025

Photo by Arnel Hasanovic (Unsplash).

Apple, according to Bloomberg’s Mark Gurman, is taking a “staggered” approach to the launch of its coming AI-enabled operating system.

The details: While iOS 18 will be packed with AI features at launch, many more significant features likely won’t become available for a while yet.

Among these are Siri’s context and on-screen awareness capabilities, which likely won’t come until 2025, and Apple’s integration with ChatGPT, which likely won’t come until the end of this year.

Apple did not respond to a request for comment regarding the rollout.

The background: Apple unveiled its coming AI integration — dubbed Apple Intelligence — at its WWDC conference last week. Investors rejoiced at the announcement, cheering the stock onto new heights as Apple finally boarded the genAI train. Apple is once again the largest company by market cap in the world.

But it also set off a major backlash over a long list of unanswered questions regarding security, data privacy and user choice (which we covered here).

Why it matters: This slower approach could certainly give Apple time to adequately address user concerns, something that could improve the adoption of its new software.

💰AI Jobs Board:

Director, GenAI Research & Development: Henderson Harbor Group · United States · New York City Metro Area · Full-time · (Apply here)

Head of Data Science and Machine Learning: Niche · United States · Remote · Full-time · (Apply here)

Director, Generative AI: Prudential Financial · United States · Newark, NJ · Full-time · (Apply here)

📊 Funding & New Arrivals:

Jump, a startup building AI tools for financial advisors, announced $4.6 million in seed funding.

Tomato AI, a startup working on voice communication, raised $2.1 million in funding.

Cognigy, an AI startup working on customer service automation, raised $100 million in Series C funding.

🌎 The Broad View:

The FTC is going after Adobe for deceiving customers with a difficult-to-cancel subscription offering (FTC).

Report finds that global audiences are suspicious of AI-powered newsrooms (Reuters).

Wall Street’s fear gauge is at a 5-year low (Opening Bell Daily).

*Indicates a sponsored link

Come work with us

The Deep View family is expanding, and we’d love to have you!

We’re looking for a skilled and passionate writer/editor to report on and write vividly about the intersection of artificial intelligence with a core B2B vertical.

If you’re interested in joining our team & carving out a beat in the AI world, please let us know by applying here, today. We’re looking forward to hearing from you!

AI cameras were quietly used to detect the emotions of UK train passengers

Photo by Pau Casals (Unsplash).

Over the past two years, Network Rail has been trialing the use of AI-enabled “smart” CCTV cameras across eight major train stations in the United Kingdom.

The purpose behind these trials — first reported in February by James O’Malley — was broadly to increase safety and security at train stations across the country. The software being applied to cameras throughout each test station was designed to recognize objects and activities; if someone fell onto the tracks, or entered a restricted area, or got into a fight with another passenger, station employees would receive an instant notification so they could step in.

The idea was to leverage AI to run a safer, more efficient train station.

The full story: A new set of documents, obtained by Big Brother Watch and first reported by Wired, details the full scope of the trial.

In one document, which outlines some of the use cases explored by the trial, Network Rail said that it expects no impact on individuals’ right to privacy: “The station is a public place where there is no expectation of privacy. Cameras will not be placed in sensitive areas.”

There is no option for people to opt out of the program, as data, according to the documents, is being “anonymized.”

Another document details feedback on the trial. In one section, the document explores a “passenger demographics” use case, which “produces a statistical analysis of age range and male/female demographics. The use case is also able to analyze for emotion in people (e.g., happy, sad and angry).”

According to the document, there are two possible benefits to this:

It can be used to measure customer satisfaction.

“This data could be utilized to maximum advertising and retail revenue.”

This use case, according to the document, will “probably” be used sparingly and “is viewed with more caution.”

Network Rail did not respond to my request for comment, but told Wired in a statement that it takes security “extremely seriously” and works closely with the police “to ensure that we’re taking proportionate action, and we always comply with the relevant legislation regarding the use of surveillance technologies.”

It is not clear if these systems are currently in use, or at what scale.

Jake Hurfurt, the head of research and investigations at Big Brother Watch, told Wired that the “rollout and normalization of AI surveillance in these public spaces, without much consultation and conversation, is quite a concerning step.”

Why this isn’t great

The technical problem: On a technical level, this kind of technology is just not that good at properly detecting emotions in humans. Part of this is rooted in models’ inherent lack of understanding. The other part revolves around the simple fact that human emotions are really complex.

The ethical problem: The ethical considerations of using this kind of tech are numerous (this paper breaks down 50 of them).

Though the U.K. is no longer a part of the European Union, the EU’s recently enacted AI Act bans emotion recognition in the workplace and in schools.

Several groups, including Access Now and the European Digital Rights group (EDRi), pushed for an all-out ban on emotion recognition, saying that it is a) based on “pseudoscience” and b) incredibly invasive.

The EDRi said that emotion recognition endangers “our rights to privacy, freedom of expression and the right against self-incrimination,” adding that this is of particular concern for neurodiverse people.

Ella Jakubowska, a senior policy advisor at EDRi, told MIT Technology Review last year that emotion recognition is “about social control; about who watches and who gets watched; about where we see a concentration of power.”

My view: When I first explored the ethics of AI, this was the kind of issue that I encountered. Not an out-of-control superintelligence, but a concentration of power. This specific instance might be well-meaning, but it establishes a dangerous precedent and points us toward a potentially dark path. The term “Orwellian,” though perhaps overused, comes to mind here.

As Nell Watson, an AI researcher and ethicist, told me a year ago: “There isn't a synchronicity between the ability for people to make decisions about AI systems, what those systems are doing, how they're interpreting them and what kinds of impressions these systems are making.”

Brave Search API: An ethical, human-representative web dataset to train your AI models. *

Trinka: An AI-Powered grammar and writing tool.

Mixo: Create an entire website starting with a short description.

Have cool resources or tools to share? Submit a tool or reach us by replying to this email (or DM us on Twitter).

*Indicates a sponsored link

SPONSOR THIS NEWSLETTER

The Deep View is currently one of the world’s fastest-growing newsletters, adding thousands of AI enthusiasts a week to our incredible family of over 200,000! Our readers work at top companies like Apple, Meta, OpenAI, Google, Microsoft and many more.

One last thing👇

AI is not “producing $200B of value” per year today. It’s closer to $5B.

— François Chollet (@fchollet)

8:21 PM • Jun 16, 2024

That's a wrap for now! We hope you enjoyed today’s newsletter :)

We appreciate your continued support! We'll catch you in the next edition 👋

-Ian Krietzberg, Editor-in-Chief, The Deep View