⚙️ Google CEO has 'no solution' to AI Overview hallucinations

Good morning. I am writing this to the tune of driving rain and rolling thunder. There’s something about May thunderstorms … such a peaceful sound.

Hope you all enjoyed the three-day weekend.

In today’s newsletter:

💰 Elon Musk’s xAI reaches $24 billion valuation on fresh funding round

💸 Musk is planning to build a ‘Gigafactory of Compute’ for xAI

🌎 UN says generative AI might undermine international human rights

🛜 Google CEO has ‘no solution’ to botched AI Search rollout

Elon Musk’s xAI reaches $24 billion valuation on fresh funding round

Created with AI by The Deep View.

xAI — the artificial intelligence start-up launched and run by tech billionaire Elon Musk — on Sunday announced the closure of a $6 billion Series B funding round. The company said the round included participation from Andreessen Horowitz, Sequoia Capital, Fidelity Management & Research Company and Prince Alwaleed Bin Talal, among others.

Musk said in a post that the company’s pre-money valuation was $18 billion, meaning it has now secured a post-money valuation of $24 billion (OpenAI, for context, is valued somewhere between $80 billion and $100 billion).
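The valuation math is simple. Post-money valuation is the pre-money valuation plus the new cash raised. As a rough sketch using the figures Musk cited, and assuming the full $6 billion went in at that valuation, the new investors would collectively hold about a quarter of the company:

$$
V_{\text{post}} = V_{\text{pre}} + I = \$18\text{B} + \$6\text{B} = \$24\text{B},
\qquad
\frac{I}{V_{\text{post}}} = \frac{\$6\text{B}}{\$24\text{B}} = 25\%
$$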

The details: xAI said that the money will be used to take xAI’s first products to market, “build advanced infrastructure and accelerate the research and development of future technologies.” Musk said in a separate post that “there will be more to announce in the coming weeks.”

Some context: Musk, who has said that the solution to AI risk is to make a “curious” and “truth-seeking” AI, believes artificial general intelligence will be achieved next year. Many researchers disagree on both points.

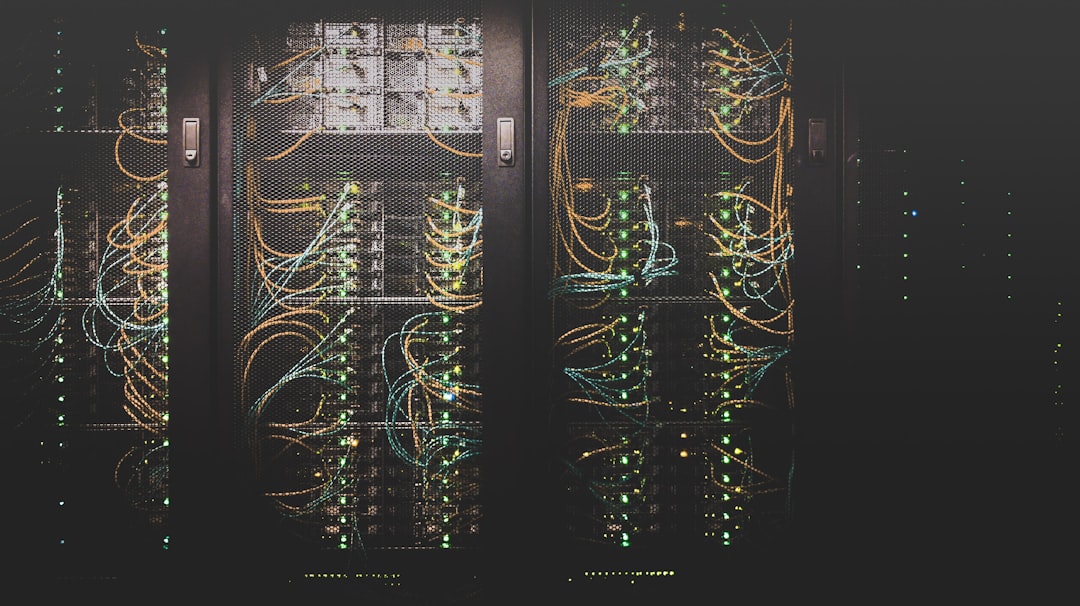

Musk is planning to build a ‘Gigafactory of Compute’ for xAI

Photo by Taylor Vick (Unsplash).

Some of that “advanced infrastructure” that xAI mentions likely involves Musk’s reported plan of building a massive supercomputer that he has taken to calling a “gigafactory of compute.”

The details: Musk, who has said that xAI’s next version of Grok will require 100,000 Nvidia GPUs to train and run, recently told investors that xAI plans to put all those chips together into one enormous supercomputer, according to The Information.

The chips alone would cost billions of dollars; Nvidia H100 GPUs go for about $30,000 … each.
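To put that in rough numbers: assuming all 100,000 GPUs were H100s bought at about $30,000 apiece (an assumption, since the actual chip mix, pricing and any volume discounts are not public), the chips alone come to roughly $3 billion, before networking, power and construction costs:

$$
100{,}000 \times \$30{,}000 = \$3{,}000{,}000{,}000 \approx \$3\text{ billion}
$$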

The Information reported that Musk wants the computer up and running by the fall of 2025 and that he “will hold himself personally responsible for delivering it on time.”

Other super GPU ventures: Musk is far from alone in seeking to build enormous, GPU-loaded supercomputers. Microsoft & OpenAI have been planning a supercomputer that would consist of millions of Nvidia GPUs and could carry a price tag of $100 billion.

Go deeper: These data centers consume an enormous amount of electricity & water; the more of these massive, GPU-packed data centers we build, the higher data center emissions will climb.

Together with Magical

Work Faster With This Free AI Browser Extension

Have you noticed that so much of the work we do is simply moving information between different websites and apps? That’s true no matter what profession you’re in. We format, personalize and curate information so that other people can use our data.

This work is necessary, but it’s not where our creativity shines. With AI, we can finally automate these repetitive tasks and speed them up significantly.

For example, with the free Magical AI browser extension, you can now…

Automatically populate forms and spreadsheets with data instead of manually copy-pasting information

Save and use frequent responses to reply to emails and messages 10x faster while still personalizing them

Write entire emails, messages, and replies quicker than ever with a 1-click, contextual AI writer

You’ll have Magical AI installed in your browser in less than a minute, and it can save you time on 10M+ websites. It doesn’t store your data, so your information never leaves your computer.

UN says generative AI might undermine international human rights

Photo by Priscilla du Preez (Unsplash).

Amid debates about the positive and negative impacts of AI on society, and about how it should be governed and regulated, the United Nations is seeking to change the framing of the conversation. In a recent paper, the UN argued that generative AI could pose risks to human rights, and that a human rights framework provides a vital (and simple) approach to AI governance.

AI & human rights risks: The report explores the ways in which generative AI could negatively impact the following human rights:

Freedom from Physical and Psychological Harm

Right to Equality Before the Law and to Protection against Discrimination

Right to Privacy

Right to Own Property

Freedom of Thought, Religion, Conscience and Opinion

Freedom of Expression and Access to Information

Right to Take Part in Public Affairs

Right to Work and to Gain a Living

Rights of the Child

Rights to Culture, Art and Science

The details: The report says that data scraping for AI training could violate individuals’ privacy rights as well as the intellectual property of creators and artists. It notes that genAI models can be (and have been) used to create nonconsensual deepfake porn, and that they can spread false information and supercharge mass misinformation. It also warns that genAI models could harm children’s cognitive and behavioral development.

A screenshot from the report detailing risks to the rights of the child.

💰AI Jobs Board:

Data Scientist: BCG X · United States · Hybrid · Full-time · (Apply here)

Lead Data Engineer: Typeform · United States · Remote · Full-time · (Apply here)

Artificial Intelligence Engineer: PowerUp Talent · United States · Remote · Full-time · (Apply here)

📊 Funding & New Arrivals:

Biotech firm AltruBio secured $225 million in a Series B round led by BVF Partners.

Biotech company Progentos Therapeutics secured $65 million in Series A funding.

AI-powered language learning app Praktika raised $35.5 million in Series A funding.

🌎 The Broad View:

*Indicates a sponsored link

Together with QA Wolf

Bugs sneak out when less than 80% of user flows are tested before shipping. But getting to that level of coverage, and staying there, is hard and pricey for a team of any size.

QA Wolf takes testing off your plate:

→ Get to 80% test coverage in just 4 months.

→ Stay bug-free with 24-hour maintenance and on-demand test creation.

→ Get unlimited parallel test runs.

→ Zero flakes, guaranteed.

QA Wolf has generated amazing results for companies like Salesloft, AutoTrader, Mailchimp and Bubble.

⭐ Rated 4.8/5 on G2. Ask about our 90-day pilot.

Google CEO has ‘no solution’ to botched AI Search rollout

Created with AI by The Deep View.

Google’s rollout of AI Overviews in Search has been messy, to say the least. In response to several search queries that have since gone viral, Google’s AI Overview said that pregnant women should smoke every day, cooks should add glue to pizza sauce to get the cheese to stick and people should eat rocks.

It’s important to bear in mind that some of the viral screenshots of AI Overviews were doctored.

Google has been scrambling to manually remove AI Overviews for some of these queries, according to The Verge (queries about getting cheese to stick to pizza no longer seem to trigger an AI Overview). Google maintains that the feature is still delivering “high-quality information” to users.

Unsolved problems: Just days before these instances started going viral, CEO Sundar Pichai said that AI hallucinations are an “unsolved problem.”

“There are still times it’s going to get it wrong, but I don’t think I would look at that and underestimate how useful it can be at the same time,” he said of AI Overviews.

My take: I might have stumbled onto the solution to AI hallucination. Now, stick with me here; it might be too simple to work.

Just shut it down.

Get rid of AI Overviews, and in general, stop applying hallucinatory generative AI in mediums where hallucinations can’t be tolerated.

I’ve spoken to numerous biotech and pharmaceutical companies that have said hallucinations aren’t a problem (for them) because there is such careful (human) oversight of AI generations. These companies are also applying the models in highly specific circumstances, which reduces the odds of hallucination.

But, as Pichai says, hallucination is part of the LLM architecture, so applying these models to Search will always result in the quick, easy dissemination of false information. While it might be obvious to most people that eating rocks and glue isn’t a good idea, subtler untruths (biased outputs related to history, for example) will cause a ton of damage, poisoning the collective knowledge of global society in the process.

Yoodli: An AI tool to improve your speaking skills.

IzTalk: Real-time, AI-powered language translation.

Translate.video: A tool to transcribe videos into 75+ languages.

Have cool resources or tools to share? Submit a tool or reach us by replying to this email (or DM us on Twitter).

*Indicates a sponsored link

SPONSOR THIS NEWSLETTER

The Deep View is currently one of the world’s fastest-growing newsletters, adding thousands of AI enthusiasts a week to our incredible family of over 200,000! Our readers work at top companies like Apple, Meta, OpenAI, Google, Microsoft and many more.

One last thing👇

Paul Graham on how writing refines your thinking. Couldn't agree more

— Kartikay (@Kartikayb77)

3:58 PM • May 26, 2024

That's a wrap for now! We hope you enjoyed today’s newsletter :)


We appreciate your continued support! We'll catch you in the next edition 👋

-Ian Krietzberg, Editor-in-Chief, The Deep View